Artificial Intelligence in Automotive and Aerospace Applications - Reliability Challenges

Paolo Rech

AI for autonomous vehicles: an overview

Deep Learning is increasingly pervasive in our daily lives: the number of AI-based applications is rising sharply and “intelligent” systems are becoming ubiquitous. Machine learning enables several novel technologies, ranging from the diagnosis of malignancies, to automatic predictive maintenance of industrial machines, to fully autonomous vehicles. This last application is of particular interest, since autonomous cars are expected to reduce the number of car accidents by more than three orders of magnitude. Moreover, autonomous vehicles are expected to boost deep space exploration by removing the latency of sending control signals from Earth.

Despite the great potential benefits of autonomous vehicles, it is still largely unclear whether (and to what extent) we can rely on AI. In other words, we must guarantee that the neural network has properly learned how to detect and classify objects and how to make the best decision with the information at hand. This is far from easy, considering the complexity of both the software and the hardware needed to run deep learning applications. Currently, self-driving systems are not yet compliant with the ISO 26262 dependability requirements for large-scale adoption [1], and they are not yet reliable enough to be part of a space mission. In particular, object detection, a critical task in autonomous vehicles, has been shown to be highly undependable [2] and to be responsible for the great majority of accidents in current self-driving car prototypes [3]. News about insufficient dependability has the side effect of making the general public skeptical about the large-scale deployment of self-driving vehicles and hesitant to travel in an autonomous car.

Hardware and Software Reliability Issues

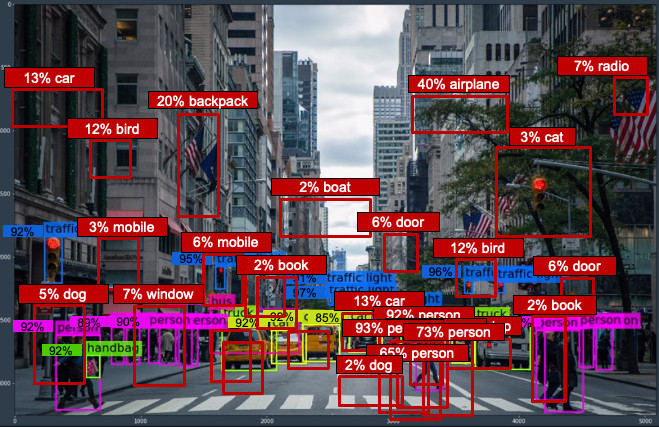

An important and challenging aspect of neural networks is that their output is not a deterministic answer but a probabilistic one. Convolutional neural networks (CNNs) detect and classify objects, assigning a probability to each detection. The downstream system then defines a threshold probability above which the object is considered detected. The detection probability and accuracy strongly depend on the training process of the CNN, and it is very hard to predict how the CNN will behave in never-seen situations.
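To make the thresholding step concrete, here is a minimal Python sketch. The `Detection` record, the scores, and the 0.5 threshold are hypothetical; real detectors such as YOLO also apply further post-processing (e.g., non-maximum suppression) that is not shown here.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    score: float   # probability assigned by the CNN
    box: tuple     # (x_min, y_min, x_max, y_max) in pixels


def filter_detections(raw, threshold=0.5):
    """Keep the detections whose probability reaches the threshold.

    The CNN only emits scored candidates; deciding what counts as
    "detected" happens here, downstream of the network.
    """
    return [d for d in raw if d.score >= threshold]


raw_output = [
    Detection("car", 0.92, (10, 20, 110, 80)),
    Detection("pedestrian", 0.61, (200, 40, 230, 120)),
    Detection("elephant", 0.07, (50, 50, 300, 200)),
]
kept = filter_detections(raw_output, threshold=0.5)
print([d.label for d in kept])  # ['car', 'pedestrian']
```

Moving the threshold changes what the vehicle "sees" without touching the network, which is one reason the same CNN output can be judged correct or incorrect depending on the downstream system.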

Figure 1: the filtered output of a CNN (left), and the possible output of a CNN (right).

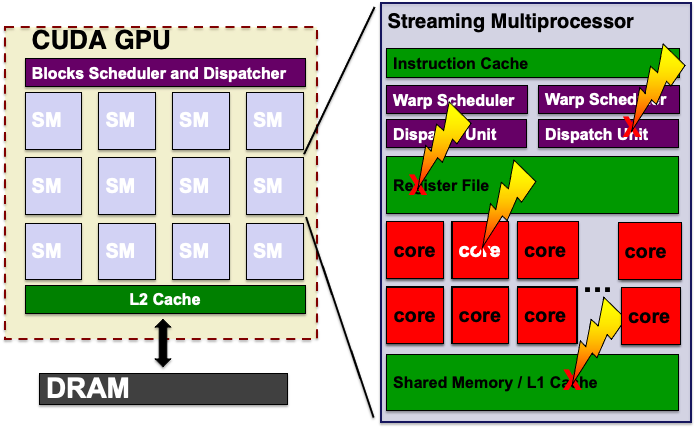

Even if the object detection software were perfect, it would still need huge computing power. Hundreds of filters must be convolved with the input frame to extract the features used for object detection. In a moving car these filters must be processed in real time, sustaining object detection at no fewer than 40 frames per second. This limited time budget forces the use of highly parallel and complex hardware to execute CNNs, such as Graphics Processing Units (GPUs), Field-Programmable Gate Arrays (FPGAs), or dedicated accelerators such as Tensor Processing Units (TPUs). Additionally, the real-time constraint significantly limits the options for reliability solutions based on replication. Space applications, by contrast, have no such constraint, since the spacecraft's reaction time can be longer. This could make the design of a reliable autonomous vehicle easier for space than for terrestrial applications.
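The arithmetic behind the real-time argument can be sketched as follows. The 15 ms inference latency is a hypothetical figure chosen for illustration, and the sketch assumes the replicated copies run one after the other on the same device.

```python
def frame_budget_ms(fps):
    """Time available to process one frame at the given frame rate, in ms."""
    return 1000.0 / fps


def fits_budget(inference_ms, fps, copies=1):
    """True if `copies` back-to-back CNN executions fit in one frame period."""
    return inference_ms * copies <= frame_budget_ms(fps)


print(frame_budget_ms(40))               # 25.0 ms per frame at 40 fps
print(fits_budget(15.0, 40, copies=1))   # True: 15 ms <= 25 ms
print(fits_budget(15.0, 40, copies=2))   # False: 30 ms > 25 ms (duplication)
```

A network that comfortably meets the deadline once can miss it when simply duplicated, which is why full replication is hard to afford in a car.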

A key reliability issue with parallel and complex hardware is that a single fault, such as one generated by the impact of an ionizing particle, can affect the computation of multiple threads. On a GPU, for instance, a single fault in the scheduler or in the shared memories is likely to affect the computation of all the parallel processes being executed on the device, as shown in Figure 2. To put this in perspective, the difference between corrupting one process and corrupting many is the difference between one corrupted pixel and many corrupted pixels. If a single pixel is corrupted, the neural network is likely to still detect the objects correctly, while if many pixels are corrupted we can expect misdetections. In the latter case the self-driving vehicle is likely to change its behavior, with unexpected consequences.
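A toy Python model shows why shared resources matter. Each output pixel of a convolution plays the role of one thread: a fault in one thread's private result corrupts a single output, whereas a fault in an input value that many threads read (as with data staged in shared memory) corrupts every output whose window covers it. The image, kernel, and corruption values are hypothetical, and scheduler faults are not modeled.

```python
def conv2d_valid(image, kernel):
    """Naive 'valid' 2D convolution (cross-correlation, as used in CNNs)."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(h - kh + 1):
        row = []
        for j in range(w - kw + 1):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += image[i + a][j + b] * kernel[a][b]
            row.append(acc)
        out.append(row)
    return out


def count_diffs(x, y):
    """Number of positions where two equally sized 2D lists differ."""
    return sum(1 for rx, ry in zip(x, y) for vx, vy in zip(rx, ry) if vx != vy)


image = [[float(i * 7 + j) for j in range(7)] for i in range(7)]  # 7x7 input
kernel = [[1.0] * 3 for _ in range(3)]                            # 3x3 filter
golden = conv2d_valid(image, kernel)                              # 5x5 output

# Fault in one thread's private result (e.g. its output register): one bad pixel.
faulty_out = [row[:] for row in golden]
faulty_out[2][2] += 100.0
print(count_diffs(golden, faulty_out))  # 1

# Fault in shared input data read by many threads: every overlapping window is hit.
faulty_in = [row[:] for row in image]
faulty_in[3][3] += 100.0
print(count_diffs(golden, conv2d_valid(faulty_in, kernel)))  # 9
```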

Figure 2: the effect of single faults in critical points of a parallel architecture such as a GPU.

Figure 3: tolerable and critical errors for CNNs.

AI Reliability Characterization

The complexity of the algorithms and of the hardware, combined with the probabilistic nature of the output, makes measuring and understanding the radiation-induced error rate of AI systems used in autonomous vehicles extremely challenging.

A CNN for object detection is composed of hundreds of layers of different types: convolution layers that extract features, MaxPool layers that reduce the resolution of the image, fully connected layers that process the extracted information, and so on. A fault in each layer, and in each operation of each layer, can have a different impact on output correctness. To make matters worse, the effect of a fault depends on the frame being processed. As a result, even considering just the software, it is hard, if not impossible, to fully cover all possible fault locations and fault impacts. Characterizing the reliability of the underlying hardware is no easier. We are talking about parallel architectures with thousands of processing units, hundreds of thousands of functional units, and billions of transistors. A fault in each of these transistors can have a different impact on software correctness. A single bit flip in a register is likely to corrupt one pixel of a feature map, while a single bit flip in the scheduler is likely to modify the execution of thousands of parallel operations, completely altering the data stream. A full and accurate characterization of such a device is a herculean effort. As a side note, the hardware needed to run CNNs in real time typically consists of commercial chips originally designed for gaming or high-performance computing, with reliability requirements totally different from those of a self-driving vehicle. As a result, chip designers have been pushed to improve the reliability of their computing architectures, with (we must acknowledge) impressive results: the error rate of GPUs and CPUs running CNNs has been reduced by orders of magnitude in the last decade.
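How much a single register bit flip disturbs a feature-map pixel depends on which bit is hit. The sketch below assumes the value is stored as an IEEE-754 float32 (the value 0.75 is hypothetical) and flips single bits with Python's standard `struct` module.

```python
import struct


def flip_bit_f32(value, bit):
    """Return `value`, encoded as IEEE-754 float32, with bit `bit` (0 = LSB) inverted."""
    (as_int,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", as_int ^ (1 << bit)))
    return flipped


activation = 0.75  # hypothetical feature-map value held in a register
print(flip_bit_f32(activation, 0))   # lowest mantissa bit: 0.75 + 2**-24
print(flip_bit_f32(activation, 30))  # highest exponent bit: 1.5 * 2**127, about 2.55e38
print(flip_bit_f32(activation, 31))  # sign bit: -0.75
```

A flip in the low mantissa bits barely changes the value and is likely harmless, while an exponent flip turns a modest activation into an enormous one that can dominate later layers.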

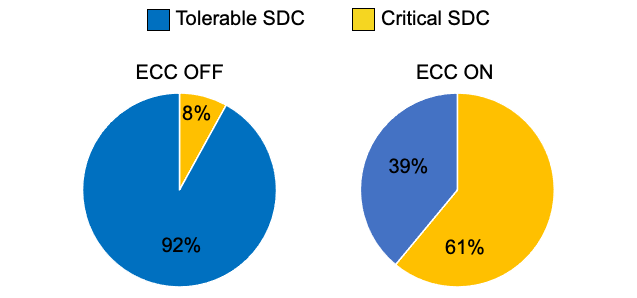

Our research on the reliability of AI frameworks has focused on the distinction between tolerable and critical errors. As shown in Figure 3, as long as the error still allows a sufficiently good detection, we can mark it as tolerable. Only when an object is missed or a false positive appears do we need to consider the error critical. Our intuition is that not all hardware faults modify the AI framework computation (some are masked), and not all faults that reach the AI framework output are critical. A shift of a few millimeters in the position of an object is not as critical as seeing a nonexistent elephant in front of the vehicle.
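One way to operationalize the tolerable/critical distinction is to compare the faulty detections with the fault-free (golden) ones. The sketch below assumes detections are (label, box) pairs and uses intersection over union (IoU) with a hypothetical 0.5 threshold; the matching criterion used in the experiments may differ, and the greedy any-match rule is a simplification of one-to-one matching.

```python
def iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def classify_sdc(golden, faulty, iou_min=0.5):
    """Label a corrupted output 'tolerable' or 'critical' against the golden one.

    Critical if a golden object has no same-label match with IoU >= iou_min
    (misdetection) or a faulty detection matches no golden object (false positive).
    """
    def matched(d, pool):
        return any(d[0] == p[0] and iou(d[1], p[1]) >= iou_min for p in pool)

    missed = any(not matched(g, faulty) for g in golden)
    false_pos = any(not matched(f, golden) for f in faulty)
    return "critical" if missed or false_pos else "tolerable"


golden = [("car", (10, 20, 110, 80))]
shifted = [("car", (12, 20, 112, 80))]                             # small box shift
phantom = [("car", (10, 20, 110, 80)), ("elephant", (0, 0, 50, 50))]
print(classify_sdc(golden, shifted))  # tolerable
print(classify_sdc(golden, phantom))  # critical
```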

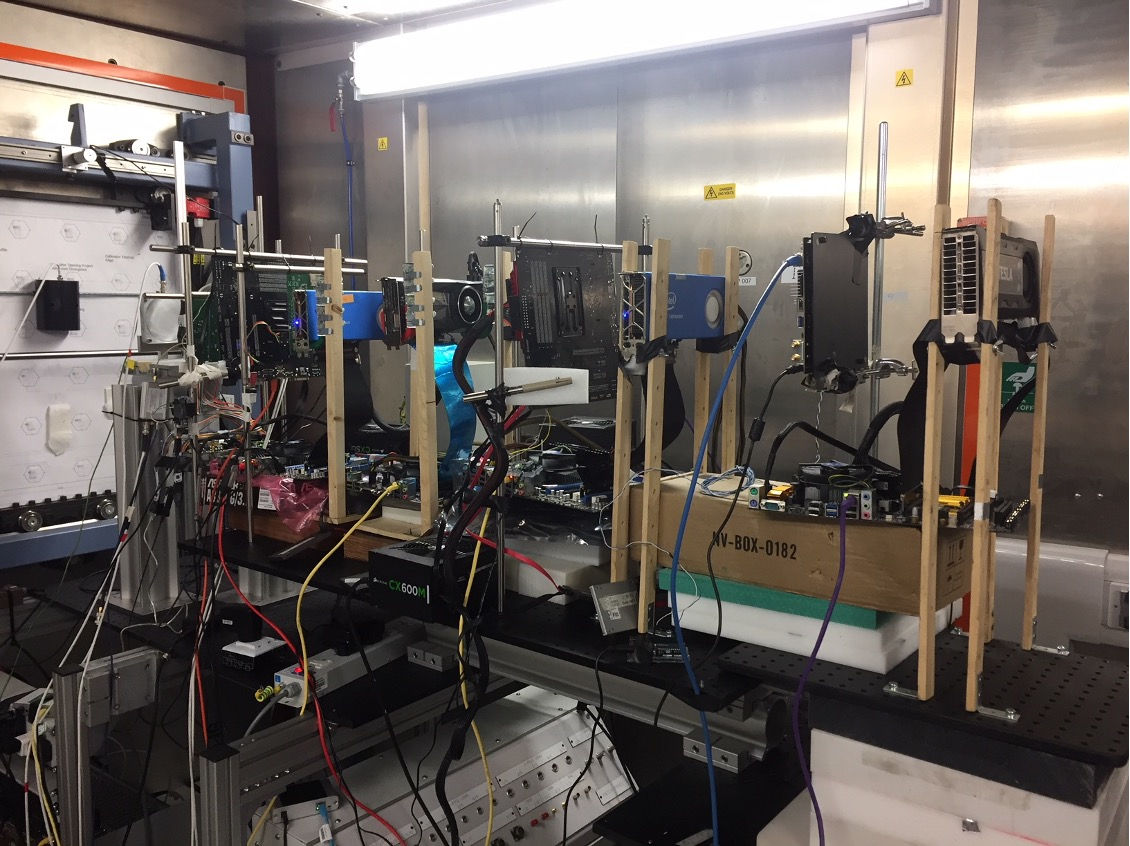

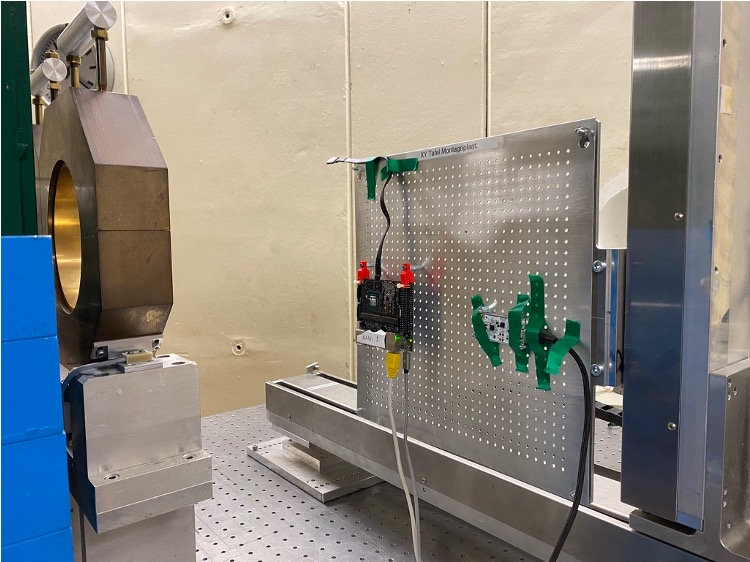

To understand the reliability of AI frameworks, we have exposed GPUs, TPUs, CPUs, and FPGAs executing CNNs to neutrons and heavy ions. It is worth noting that, to be tested with heavy ions, the devices must be prepared so that the particles reach the silicon active area. Most of the tested devices are flip-chip packages, and in some facilities (such as RADEF and UCL), where particle penetration is shallow, de-lidding is necessary. A convenient aspect of these devices is that they are fully controlled remotely over an Ethernet connection. Thus, even though the device must be placed in a vacuum chamber for heavy-ion testing, the setup is relatively easy. Figure 4 shows the setup mounted at ChipIR (neutrons) and at PARTREC (heavy ions). The extraordinary amount of beam time devoted to this characterization has been granted by the ChipIR and EMMA facilities at the ISIS Neutron and Muon Source of the Rutherford Appleton Laboratory in the UK, by the LANSCE facility of Los Alamos National Laboratory in the USA, by the Institut Laue-Langevin in France, and by the PARTREC facility of the University Medical Center Groningen in the Netherlands, thanks to the RADNEXT project.

Figure 4: experimental setup at ChipIR (left), and setup at PARTREC (right).

We have tested various NVIDIA GPU architectures (Kepler, Volta, Ampere), AMD and Intel CPUs, Raspberry Pis, and low-power EdgeAI chips (NeuroShield and Google’s TPU). On each of these devices we ran CNNs for object detection, using as input a subset of the data set used to train the network and validate its accuracy. Frame selection is fundamental: testing with all the thousands of frames would be impossible, and choosing biased frames (e.g., with no object to detect) would lead to unrealistic evaluations.
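Frame selection can be sketched as a seeded random draw restricted to frames that contain at least one annotated object. The annotation format and frame names below are hypothetical, and a real campaign might also stratify the draw by object class or scene type.

```python
import random


def select_test_frames(annotations, n, seed=0):
    """Pick n distinct frames at random among those with at least one object.

    `annotations` maps a frame identifier to its list of ground-truth labels.
    Frames with nothing to detect are excluded to avoid a biased evaluation.
    """
    candidates = sorted(f for f, objs in annotations.items() if objs)
    if n > len(candidates):
        raise ValueError("not enough frames containing objects")
    return random.Random(seed).sample(candidates, n)  # seeded: reproducible


annotations = {
    "frame_000": ["car", "pedestrian"],
    "frame_001": [],
    "frame_002": ["truck"],
    "frame_003": [],
    "frame_004": ["car"],
}
picked = select_test_frames(annotations, 2, seed=42)
print(picked)
```

Sorting the candidates before sampling keeps the selection reproducible for a given seed, so the same frames can be reused across devices and beam campaigns.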

Figure 5 shows the share of Silent Data Corruptions (SDCs) observed during neutron beam experiments with the YOLO CNN running on a GPU that were critical (misdetection) or tolerable (detection unaltered). When ECC is OFF (i.e., memory is not protected), the vast majority of the observed errors are tolerable, while when ECC is ON (i.e., only errors in logic propagate to the output) the majority of SDCs modify detection. This means that errors in memory might not be as critical as errors in logic for CNN execution. To obtain a good reliability evaluation and effective hardening of CNNs, it is mandatory to focus on logic errors. Obviously, with ECC OFF many more SDCs are observed (about one order of magnitude more) than with ECC ON. However, these errors might not be critical and could be tolerated by CNNs.
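The trade-off between SDC rate and criticality can be written as a simple product. The symbols are introduced here for illustration: $\lambda_{\mathrm{SDC}}$ is the observed SDC rate and $f_{\mathrm{crit}}$ the fraction of SDCs that are critical, with superscripts for the ECC configuration.

$$
\lambda_{\mathrm{crit}} = f_{\mathrm{crit}}\,\lambda_{\mathrm{SDC}},
\qquad
\frac{\lambda_{\mathrm{crit}}^{\mathrm{OFF}}}{\lambda_{\mathrm{crit}}^{\mathrm{ON}}}
= \frac{f_{\mathrm{crit}}^{\mathrm{OFF}}}{f_{\mathrm{crit}}^{\mathrm{ON}}}
\cdot \frac{\lambda_{\mathrm{SDC}}^{\mathrm{OFF}}}{\lambda_{\mathrm{SDC}}^{\mathrm{ON}}}
\approx 10\,\frac{f_{\mathrm{crit}}^{\mathrm{OFF}}}{f_{\mathrm{crit}}^{\mathrm{ON}}}
$$

The roughly tenfold increase in SDCs with ECC OFF therefore translates into critical errors only after being scaled by the ratio of critical fractions, which Figure 5 indicates is below one.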

Figure 5: critical and tolerable errors in a GPU running YOLO CNN with ECC OFF (left) and ON (right).

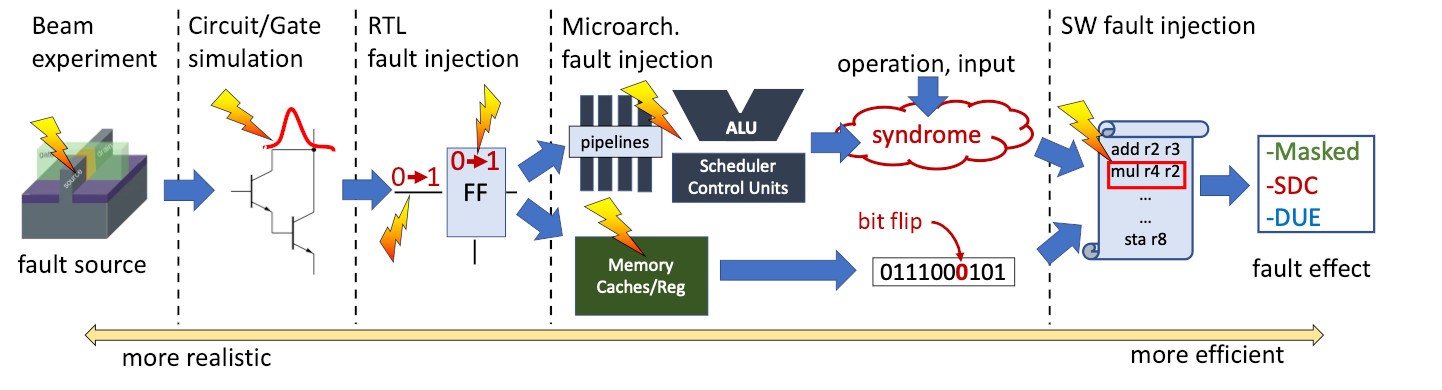

To gain a more detailed understanding of fault propagation, we have studied fault injection at different levels of abstraction. A hardware fault originates at the hardware level, from the interaction of a particle with a silicon transistor. The fault may then propagate to the circuit level and modify the behavior of the computing system. If the fault affects a memory location, we know that it can flip one or more bits. However, when the fault modifies the logic units that execute the instruction (pipeline registers, ALU, scheduler), its effect on the operation output is hard to predict. In other words, the way the fault manifests itself (i.e., the fault model) is simple for memory but very complex for logic. A reliability evaluation performed at a very low level is expected to be highly realistic, but it is very costly. Fault injection at higher levels of abstraction is cheaper but, since it relies on synthetic fault models, risks being unrealistic, especially for logic errors.

Figure 6: fault propagation in the hardware-software stack.

Figure 6 illustrates the different evaluation methodologies. In general, methodologies that act closer to the physical source of the fault (i.e., the silicon implementation) are more realistic (and more costly in terms of processing time), while methodologies closer to the output manifestation of the fault are more efficient (but less realistic in terms of the fault effect on real applications). For parallel devices (and GPUs in particular) executing CNNs, the challenges of each reliability characterization are exacerbated by the hardware and software complexity.

Beam experiments induce faults directly in the transistors by the interaction of accelerated particles with the silicon lattice, providing highly realistic error rates [4].
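Beam error rates are commonly expressed as a cross section (observed errors divided by particle fluence) and then scaled to a field environment. A minimal sketch, assuming the widely used sea-level reference flux of about 13 neutrons/(cm²·h) above 10 MeV from JEDEC JESD89A; the error count and fluence below are hypothetical, and other environments (avionics, space) need a different flux.

```python
def cross_section(errors, fluence_per_cm2):
    """Device cross section in cm^2: observed errors per unit fluence."""
    return errors / fluence_per_cm2


def fit_rate(sigma_cm2, flux_per_cm2_h=13.0):
    """Failures In Time: expected errors per 10^9 device-hours at the given flux.

    The default 13 n/(cm^2 h) is the JEDEC JESD89A reference flux of neutrons
    above 10 MeV at sea level (New York City); adjust it for other environments.
    """
    return sigma_cm2 * flux_per_cm2_h * 1e9


# Hypothetical campaign: 50 SDCs observed over a fluence of 1e11 n/cm^2.
sigma = cross_section(50, 1e11)
print(sigma)            # 5e-10 cm^2
print(fit_rate(sigma))  # about 6.5 FIT
```

Counting critical and tolerable SDCs separately gives one cross section per class, which is how the distinction of Figure 5 can be turned into separate error rates.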

Software fault injection is performed at the highest level of abstraction and, on GPUs, has proved efficient at identifying the code portions that, once corrupted, are more likely to affect the computation [5] [6]. However, the analysis is limited, as faults can be injected only into the subset of resources visible to the programmer. Unfortunately, critical resources of highly parallel devices (e.g., the hardware scheduler and thread control units) are not accessible to the programmer and, thus, cannot be characterized via high-level fault injection. Additionally, the adopted fault model (typically single or double bit flips) might be accurate for the main memory structures (register files, caches) but risks being unrealistic for faults in the computing cores or control logic. In fact, as shown in Figure 6, while a fault in a memory array directly translates into a corrupted value, a single transient fault in a resource used to execute an operation (pipelines, ALU, scheduler, etc.) can have non-obvious effects on the operation output.
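A software fault injection campaign can be emulated in a few lines: run a fault-free (golden) execution, then repeatedly flip one random bit in the destination value of a randomly chosen operation and compare. This toy Python model of a single float32 neuron with a detection threshold is not NVBitFI and does not model GPU resources; the inputs, weights, threshold, and function names are hypothetical. It also mirrors the limitation discussed above: only the programmer-visible accumulator can be corrupted.

```python
import random
import struct


def to_f32(x):
    """Round a Python float to the nearest IEEE-754 float32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def flip_bit_f32(value, bit):
    """Invert bit `bit` (0 = LSB) of the float32 encoding of `value`."""
    (as_int,) = struct.unpack("<I", struct.pack("<f", value))
    return struct.unpack("<f", struct.pack("<I", as_int ^ (1 << bit)))[0]


def neuron(features, weights, fault=None):
    """Toy float32 dot product (a single output 'pixel').

    fault = (op_index, bit) flips one bit of the accumulator right after
    multiply-add number op_index, emulating a corrupted destination register.
    """
    acc = 0.0
    for k, (x, w) in enumerate(zip(features, weights)):
        acc = to_f32(acc + to_f32(x * w))
        if fault is not None and fault[0] == k:
            acc = flip_bit_f32(acc, fault[1])
    return acc


def classify(golden, faulty, threshold):
    """Masked, tolerable, or critical, in the spirit of Figure 3."""
    if faulty == golden:
        return "masked"
    if (faulty > threshold) == (golden > threshold):
        return "tolerable"  # value corrupted, detection decision unchanged
    return "critical"       # detection decision flipped


def campaign(features, weights, threshold=1.0, trials=1000, seed=0):
    """Inject `trials` random single-bit faults and tally the outcomes."""
    rng = random.Random(seed)
    golden = neuron(features, weights)
    counts = {"masked": 0, "tolerable": 0, "critical": 0}
    for _ in range(trials):
        fault = (rng.randrange(len(features)), rng.randrange(32))
        counts[classify(golden, neuron(features, weights, fault), threshold)] += 1
    return counts


features = [1.0, 2.0, 3.0, 4.0]
weights = [0.5, -1.0, 0.25, 1.0]  # fault-free score: 3.25
print(campaign(features, weights))
```

Flips in low-order mantissa bits can be rounded away by later float32 additions (masked), others change the score without changing the decision (tolerable), and sign or large exponent flips can change the decision (critical).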

Micro-architectural fault injection provides higher fault coverage than software fault injection, as faults can, in principle, be injected into most modules. One issue with micro-architectural fault injection on GPUs is that the description of some modules (including the scheduler and pipelines) is behavioral, and their implementation is not necessarily similar to the real one.

Register-Transfer Level (RTL) fault injection accesses all resources (flip-flops and signals) and provides a more realistic fault model, given the proximity of the RTL description to the actual implementation of the final hardware. However, the time required to inject a statistically significant number of faults makes RTL injection impractical. The huge number of modules and units in a GPU and the complexity of modern HPC and safety-critical applications increase the time needed for an exhaustive RTL fault injection (hundreds of hours for small codes), making it unfeasible. In previous work, GPU reliability was evaluated through RTL fault injection using a two-level fault-injection strategy [7].
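One common way to quantify "statistically significant" is the statistical fault injection formula of Leveugle et al. (DATE 2009), which gives the number of injections needed for a chosen error margin and confidence level. It is shown here as an illustration, not necessarily the criterion used in this work; the population size and the per-injection RTL simulation time are hypothetical.

```python
import math


def fault_injection_sample_size(population, margin=0.01, t=2.58, p=0.5):
    """Injections needed to estimate a failure probability within +/- margin.

    population: number of possible (fault site, injection time) pairs N
    margin:     tolerated error e on the estimate
    t:          normal-distribution cut-off (2.58 ~ 99% confidence)
    p:          a-priori failure probability; 0.5 is the conservative choice
    """
    n = population / (1 + margin**2 * (population - 1) / (t**2 * p * (1 - p)))
    return math.ceil(n)


n = fault_injection_sample_size(population=10**9)
print(n)                           # roughly 16.6 thousand injections
hours_per_injection = 0.5          # hypothetical RTL simulation time per fault
print(n * hours_per_injection)     # total simulation hours for one workload
```

Even at a modest per-fault simulation time, the total quickly reaches thousands of hours per workload, which is why exhaustive RTL campaigns on real applications are out of reach.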

Circuit- or gate-level simulations induce analog current spikes or digital faults at the lowest abstraction level that still allows tracking fault propagation (which beam tests do not allow). There are two main issues with the level of detail required to perform this analysis on GPUs: (1) a circuit- or gate-level description of GPUs is not publicly available and, even if it were, (2) the time required to evaluate the whole circuit would be excessive (the characterization of a small circuit takes weeks).

The approach we have designed for an accurate and realistic evaluation of CNN reliability combines the fine-grained evaluation of RTL fault injection with the flexibility and efficiency of software fault injection and the realistic measurements provided by beam experiments on real GPUs [7] [11]. We have extended a software framework (NVBitFI) to inject the fault models derived from our RTL and beam analyses, rather than the simplistic fault model used by all previous work on GPU software fault injection. The high speed of software fault injection allows us to observe the effect of fault syndromes on the execution of real-world applications, while the few previous works on GPU RTL fault injection are limited to naive workloads.
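The difference between a synthetic fault model and a measured one can be sketched as sampling the corruption from an empirical syndrome distribution instead of flipping a bit. Everything below is hypothetical: the `SYNDROMES` table of relative errors and frequencies is an invented placeholder standing in for distributions derived from RTL injection and beam data, and the code does not reflect NVBitFI's interface.

```python
import random

# HYPOTHETICAL syndrome table: (relative error, probability) pairs describing how
# a fault in a functional unit corrupts an operation's result. Invented values.
SYNDROMES = [(1e-6, 0.40), (1e-2, 0.30), (1.0, 0.20), (1e3, 0.10)]


def inject_syndrome(value, rng):
    """Corrupt `value` with a signed relative error drawn from the syndrome table."""
    errors = [e for e, _ in SYNDROMES]
    weights = [w for _, w in SYNDROMES]
    rel = rng.choices(errors, weights=weights, k=1)[0]
    sign = rng.choice((-1.0, 1.0))
    return value * (1.0 + sign * rel)


rng = random.Random(1)
golden = 3.25
faulty = [inject_syndrome(golden, rng) for _ in range(5)]
print(faulty)
```

Swapping the table changes the fault model without touching the injection loop, which is what makes measured syndromes cheap to reuse across many real-world workloads.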

Can we rely on AI?

The fundamental question we are trying to answer is whether we can rely on AI to guide our vehicles. Are we ready to put our cars (and our lives) in the hands of a neural network? Probably not yet. Besides radiation-induced soft errors, accuracy problems and flaws in software and hardware design can also cause dangerous misdetections.

There are various solutions to mitigate the effects of faults. Redundancy is one of the most effective, but it does not suit CNNs, and AI in general, well. As we have seen, most faults do not impact detection, so duplication, which replicates every instruction or module, is inefficient. Given the real-time constraints of object detection we cannot afford to duplicate all instructions, and the cost of duplicating the hardware can be too high, especially for the automotive market. The key to an effective and efficient hardening solution for AI is to understand fault origin and propagation through the whole abstraction stack. Knowing which faults are more likely to generate misdetections makes it possible to apply hardening only where it is strictly necessary. Recently, various hardening solutions have been proposed to reduce the impact of transient faults in DNNs. Algorithm-Based Fault Tolerance (ABFT) [2] [8], filters that detect propagating errors in MaxPool layers [2], and (selective) replication with comparison [9] [10] have been shown to significantly increase DNN reliability. The big open question regarding AI hardening is related to the challenges of its reliability evaluation. How can we ensure that the result is correct if, even in the absence of faults, the output of the framework is probabilistic? We need to face the fact that object detection frameworks are not yet finalized. The reliability evaluations the community is performing are based on prototypes and evolving software and hardware. A good reliability evaluation and a useful hardening solution should therefore be as generic as possible, so that they can be applied (and remain effective) in future AI frameworks.
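To illustrate why ABFT is cheaper than duplication, here is a minimal sketch of the classic checksum scheme for matrix multiplication (Huang and Abraham), the operation convolutions are often lowered to. It is a generic textbook version, not the specific technique of [2] [8]; function names and matrices are hypothetical, and integer inputs keep the checksum comparison exact.

```python
def matmul(a, b):
    """Plain matrix product of lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def encode_and_multiply(a, b):
    """Multiply checksum-encoded operands: result is C plus checksum row/column."""
    a_c = a + [[sum(col) for col in zip(*a)]]  # extra row: column sums of A
    b_r = [row + [sum(row)] for row in b]      # extra column: row sums of B
    return matmul(a_c, b_r)                    # (n+1) x (m+1) matrix


def check_and_correct(full, tol=1e-6):
    """Detect, locate, and correct a single corrupted element of C in place.

    Returns None if all checksums agree, (row, col) if one element was
    corrected, or "uncorrectable" if the mismatch pattern cannot be located.
    """
    n, m = len(full) - 1, len(full[0]) - 1
    bad_rows = [i for i in range(n) if abs(sum(full[i][:m]) - full[i][m]) > tol]
    bad_cols = [j for j in range(m)
                if abs(sum(full[i][j] for i in range(n)) - full[n][j]) > tol]
    if not bad_rows and not bad_cols:
        return None
    if len(bad_rows) == 1 and len(bad_cols) == 1:
        i, j = bad_rows[0], bad_cols[0]
        full[i][j] -= sum(full[i][:m]) - full[i][m]  # remove the row discrepancy
        return (i, j)
    return "uncorrectable"


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
full = encode_and_multiply(a, b)
full[1][0] += 7                        # hypothetical fault corrupts one output
print(check_and_correct(full))         # (1, 0)
print([row[:2] for row in full[:2]])   # [[19, 22], [43, 50]]
```

The checksums add one row and one column of work instead of a full second multiplication, and a single corrupted output is both located and repaired.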